李林超博客

首页

归档

留言

友链

动态

关于

归档

留言

友链

动态

关于

首页

大数据

正文

06.【转载】Dataset (DataFrame) 的基础操作(二)

Leefs

2021-07-16 PM

1888℃

0条

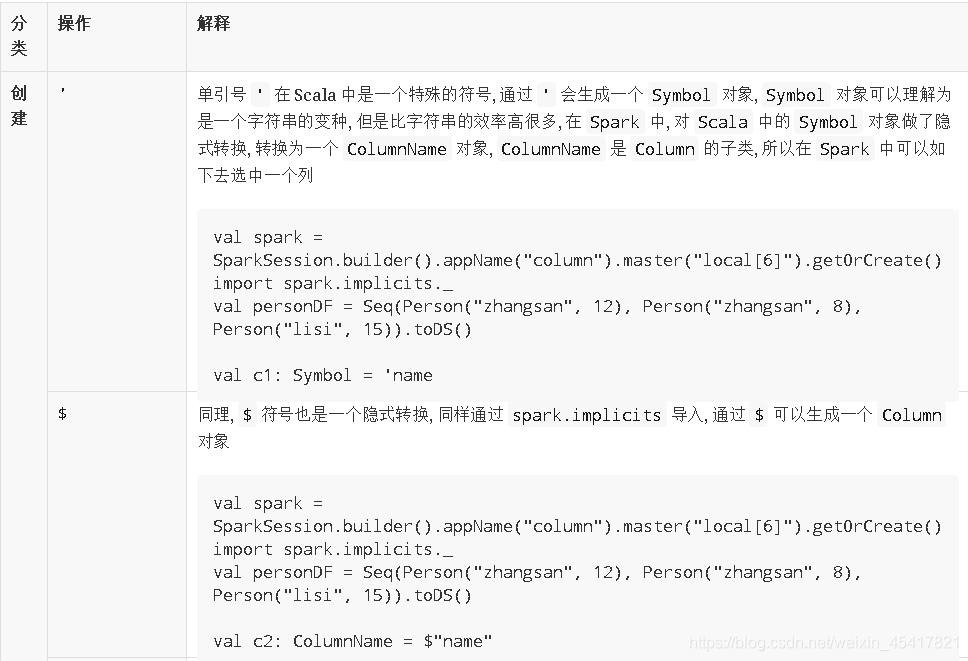

# 06.【转载】Dataset (DataFrame) 的基础操作(二) ### 三、Column 对象 #### 导读 > Column 表示了 Dataset 中的一个列, 并且可以持有一个表达式, 这个表达式作用于每一条数据, 对每条数据都生成一个值, 之所以有单独这样的一个章节是因为列的操作属于细节, 但是又比较常见, 会在很多算子中配合出现     **全套代码展示:** ```scala package com.spark.transformation import com.spark.Person import org.apache.log4j.{Level, Logger} import org.apache.spark.sql import org.apache.spark.sql.{ColumnName, DataFrame, Dataset, SparkSession} import org.junit.Test class Column { Logger.getLogger("org").setLevel(Level.ERROR) val spark: SparkSession = SparkSession.builder() .master("local[6]") .appName(this.getClass.getSimpleName) .getOrCreate() import spark.implicits._ import org.apache.spark.sql.functions._ @Test def creation(): Unit ={ val ds: Dataset[Person] = Seq(Person("zhangsan", 20),Person("zhangsan", 18), Person("lisi", 15)).toDS val df: DataFrame = Seq(Person("zhangsan", 20),Person("zhangsan", 18), Person("lisi", 15)).toDF //1. ' val column:Symbol = 'name //2. $ val column1: ColumnName = $"name" //3. col (必须导入functions)import org.apache.spark.sql.functions._ val column2: sql.Column = col("name") //4. column (必须导入functions) val column3: sql.Column = column("name") /** * 这四种创建方式有关联的DataSet么 */ ds.select(column).show() //DataSet可以 DataFrame 可以使用 column 对象选中行吗 df.select(column).show() //select 方法可以使用column 对象来选中某个列,那么其他的算子行么 df.where(column === "zhangsan").show() /** * column 有几个创建方式? -> 四种 * column 对象可以用作与DataSet 和 DataFrame 中 * column 可以和命令式的弱类型的API 配合使用select where * */ //5. dataset.col val column4 = ds.col("name") val column5 = df.col("name") //报错 //ds.select(column5).show() //为什么要和dataset 来绑定呢 // ds.join(df,ds.col("name") === df.col("name")) //6.dataset.apple val column6 = ds.apply("name") val column7 = ds("name") } @Test def as(): Unit ={ val ds: Dataset[Person] = Seq(Person("zhangsan", 20),Person("zhangsan", 18), Person("lisi", 15)).toDS ds.select ('name as "new_name").show() ds.select('age.as[Long]).show() } @Test def api(): Unit ={ val ds: Dataset[Person] = Seq(Person("zhangsan", 20),Person("zhangsan", 18), Person("lisi", 15)).toDS //需求一:ds增加列,双倍年龄 // age*2 其实本质上就是是将一个表达式(逻辑计划表达式) 附着到column对象上 //表达式在执行的时候对应每一条数据进行操作 ds.withColumn("doubled",'age * 2).show() //需求二:模糊查询 //select * from table where name like zhang% ds.where('name like "zhang%") //需求三:排序,正反序 ds.sort('age asc).show() //需求四:枚举判断 ds.where('name isin("zhangsan","wangwu","zhaoliu")).show() } } case class Person(name:String,age:Int) ``` ### 四、缺失值处理 #### **导读** > 1. DataFrame 中什么时候会有无效值 > 2. DataFrame 如何处理无效的值 > 3. DataFrame 如何处理 null **缺失值的处理思路** 如果想探究如何处理无效值, 首先要知道无效值从哪来, 从而分析可能产生的无效值有哪些类型, 在分别去看如何处理无效值 #### 4.1 **什么是缺失值** 一个值本身的含义是这个值不存在则称之为缺失值, 也就是说这个值本身代表着缺失, 或者这个值本身无意义, 比如说 null, 比如说空字符串  关于数据的分析其实就是统计分析的概念, 如果这样的话, 当数据集中存在缺失值, 则无法进行统计和分析, 对很多操作都有影响 #### 4.2 **缺失值如何产生的**  Spark 大多时候处理的数据来自于业务系统中, 业务系统中可能会因为各种原因, 产生一些异常的数据 例如说因为前后端的判断失误, 提交了一些非法参数. 再例如说因为业务系统修改 MySQL 表结构产生的一些空值数据等. 总之在业务系统中出现缺失值其实是非常常见的一件事, 所以大数据系统就一定要考虑这件事. #### 4.3 缺失值的类型 常见的缺失值有两种 - null, NaN 等特殊类型的值, 某些语言中 null 可以理解是一个对象, 但是代表没有对象, NaN 是一个数字, 可以代表不是数字 针对这一类的缺失值, Spark 提供了一个名为 DataFrameNaFunctions 特殊类型来操作和处理 + Null”, “NA”, " " 等解析为字符串的类型, 但是其实并不是常规字符串数据 针对这类字符串, 需要对数据集进行采样, 观察异常数据, 总结经验, 各个击破 #### 4.4 **DataFrameNaFunctions** DataFrameNaFunctions 使用 Dataset 的 na 函数来获取 ```scala val df = ... val naFunc: DataFrameNaFunctions = df.na ``` 当数据集中出现缺失值的时候, 大致有两种处理方式, 一个是丢弃, 一个是替换为某值, DataFrameNaFunctions 中包含一系列针对空值数据的方案 + DataFrameNaFunctions.drop 可以在当某行中包含 null 或 NaN 的时候丢弃此行 + DataFrameNaFunctions.fill 可以在将 null 和 NaN 填充为其它值 + DataFrameNaFunctions.replace 可以把 null 或 NaN 替换为其它值, 但是和 fill 略有一些不同, 这个方法针对值来进行替换 #### 4.5 **如何使用 SparkSQL 处理 null 和 NaN ?** 首先要将数据读取出来, 此次使用的数据集直接存在 NaN, 在指定 Schema 后, 可直接被转为 Double.NaN ```scala val schema = StructType( List( StructField("id", IntegerType), StructField("year", IntegerType), StructField("month", IntegerType), StructField("day", IntegerType), StructField("hour", IntegerType), StructField("season", IntegerType), StructField("pm", DoubleType) ) ) val df = spark.read .option("header", value = true) .schema(schema) .csv("input/beijingpm_with_nan.csv") ``` 对于缺失值的处理一般就是丢弃和填充 #### 4.6 **丢弃包含 null 和 NaN 的行** 当某行数据所有值都是 null 或者 NaN 的时候丢弃此行 ```scala df.na.drop("all").show() ``` 当某行中特定列所有值都是 null 或者 NaN 的时候丢弃此行 ```scala df.na.drop("all", List("pm", "id")).show() ``` 当某行数据任意一个字段为 null 或者 NaN 的时候丢弃此行 ```scala df.na.drop().show() df.na.drop("any").show() ``` 当某行中特定列任意一个字段为 null 或者 NaN 的时候丢弃此行 ```scala df.na.drop(List("pm", "id")).show() df.na.drop("any", List("pm", "id")).show() ``` #### 4.7 **填充包含 null 和 NaN 的列** 填充所有包含 null 和 NaN 的列 ```scala df.na.fill(0).show() ``` 填充特定包含 null 和 NaN 的列 ```scala df.na.fill(0, List("pm")).show() ``` 根据包含 null 和 NaN 的列的不同来填充 ```scala import scala.collection.JavaConverters._ df.na.fill(Map[String, Any]("pm" -> 0).asJava).show ``` #### 4.8 **如何使用 SparkSQL 处理异常字符串 ?** 读取数据集, 这次读取的是最原始的那个 PM 数据集 ```scala val df = spark.read .option("header", value = true) .csv("dataset/BeijingPM20100101_20151231.csv") ``` 使用函数直接转换非法的字符串 ```scala df.select('No as "id", 'year, 'month, 'day, 'hour, 'season, when('PM_Dongsi === "NA", 0) .otherwise('PM_Dongsi cast DoubleType) .as("pm")) .show() ``` 使用 where 直接过滤 ```scala df.select('No as "id", 'year, 'month, 'day, 'hour, 'season, 'PM_Dongsi) .where('PM_Dongsi =!= "NA") .show() ``` 使用 DataFrameNaFunctions 替换, 但是这种方式被替换的值和新值必须是同类型 ```scala df.select('No as "id", 'year, 'month, 'day, 'hour, 'season, 'PM_Dongsi) .na.replace("PM_Dongsi", Map("NA" -> "NaN")) .show() ``` **全套代码如下:** 数据准备:(beijingpm_with_nan.csv) ``` id,year,month,day,hour,season,pm 1,2010,1,1,0,4,NaN 2,2010,1,1,1,4,NaN 3,2010,1,1,2,4,NaN 4,2010,1,1,3,4,NaN 5,2010,1,1,4,4,NaN 6,2010,1,1,5,4,NaN 7,2010,1,1,6,4,NaN 8,2010,1,1,7,4,NaN 9,2010,1,1,8,4,NaN 10,2010,1,1,9,4,NaN 11,2010,1,1,10,4,NaN 12,2010,1,1,11,4,NaN 13,2010,1,1,12,4,NaN 14,2010,1,1,13,4,NaN 15,2010,1,1,14,4,NaN 16,2010,1,1,15,4,NaN 17,2010,1,1,16,4,NaN 18,2010,1,1,17,4,NaN 19,2010,1,1,18,4,NaN 20,2010,1,1,19,4,NaN 21,2010,1,1,20,4,NaN 22,2010,1,1,21,4,NaN 23,2010,1,1,22,4,NaN 24,2010,1,1,23,4,NaN 25,2010,1,2,0,4,NaN 26,2010,1,2,1,4,NaN 27,2010,1,2,2,4,NaN 28,2010,1,2,3,4,NaN 29,2010,1,2,4,4,NaN 30,2010,1,2,5,4,NaN 31,2010,1,2,6,4,NaN 32,2010,1,2,7,4,NaN 33,2010,1,2,8,4,NaN 34,2010,1,2,9,4,NaN 35,2010,1,2,10,4,NaN 36,2010,1,2,11,4,NaN 37,2010,1,2,12,4,NaN 38,2010,1,2,13,4,NaN 39,2010,1,2,14,4,NaN 40,2010,1,2,15,4,NaN 41,2010,1,2,16,4,NaN 42,2010,1,2,17,4,NaN 43,2010,1,2,18,4,NaN 44,2010,1,2,19,4,NaN 45,2010,1,2,20,4,NaN 46,2010,1,2,21,4,NaN 47,2010,1,2,22,4,NaN 48,2010,1,2,23,4,NaN 49,2010,1,3,0,4,NaN 50,2010,1,3,1,4,NaN 51,2010,1,3,2,4,NaN 52,2010,1,3,3,4,NaN 53,2010,1,3,4,4,NaN 54,2010,1,3,5,4,NaN 55,2010,1,3,6,4,NaN 56,2010,1,3,7,4,NaN 57,2010,1,3,8,4,NaN 58,2010,1,3,9,4,NaN 59,2010,1,3,10,4,NaN 60,2010,1,3,11,4,NaN 61,2010,1,3,12,4,NaN 62,2010,1,3,13,4,NaN 63,2010,1,3,14,4,NaN 64,2010,1,3,15,4,NaN 65,2010,1,3,16,4,NaN 66,2010,1,3,17,4,NaN 67,2010,1,3,18,4,NaN 68,2010,1,3,19,4,NaN 69,2010,1,3,20,4,NaN 70,2010,1,3,21,4,NaN 71,2010,1,3,22,4,NaN 72,2010,1,3,23,4,NaN 73,2010,1,4,0,4,NaN 74,2010,1,4,1,4,NaN 75,2010,1,4,2,4,NaN 76,2010,1,4,3,4,NaN 77,2010,1,4,4,4,NaN 78,2010,1,4,5,4,NaN 79,2010,1,4,6,4,NaN 80,2010,1,4,7,4,NaN 81,2010,1,4,8,4,NaN 82,2010,1,4,9,4,NaN 83,2010,1,4,10,4,NaN 84,2010,1,4,11,4,NaN 85,2010,1,4,12,4,NaN 86,2010,1,4,13,4,NaN 87,2010,1,4,14,4,NaN 88,2010,1,4,15,4,NaN 89,2010,1,4,16,4,NaN 90,2010,1,4,17,4,NaN 91,2010,1,4,18,4,NaN 92,2010,1,4,19,4,NaN 93,2010,1,4,20,4,NaN 94,2010,1,4,21,4,NaN 95,2010,1,4,22,4,NaN 96,2010,1,4,23,4,NaN 97,2010,1,5,0,4,NaN 98,2010,1,5,1,4,NaN 99,2010,1,5,2,4,NaN 100,2010,1,5,3,4,NaN 101,2010,1,5,4,4,NaN 102,2010,1,5,5,4,NaN 103,2010,1,5,6,4,NaN 104,2010,1,5,7,4,NaN 105,2010,1,5,8,4,NaN 106,2010,1,5,9,4,NaN 107,2010,1,5,10,4,NaN 108,2010,1,5,11,4,NaN 109,2010,1,5,12,4,NaN 110,2010,1,5,13,4,NaN 111,2010,1,5,14,4,NaN 112,2010,1,5,15,4,NaN 113,2010,1,5,16,4,NaN 114,2010,1,5,17,4,NaN 115,2010,1,5,18,4,NaN 116,2010,1,5,19,4,NaN 117,2010,1,5,20,4,NaN 118,2010,1,5,21,4,NaN 119,2010,1,5,22,4,NaN 120,2010,1,5,23,4,NaN 121,2010,1,6,0,4,NaN 122,2010,1,6,1,4,NaN 123,2010,1,6,2,4,NaN 124,2010,1,6,3,4,NaN 125,2010,1,6,4,4,NaN 126,2010,1,6,5,4,NaN 127,2010,1,6,6,4,NaN 128,2010,1,6,7,4,NaN 129,2010,1,6,8,4,NaN 130,2010,1,6,9,4,NaN 131,2010,1,6,10,4,NaN 132,2010,1,6,11,4,NaN 133,2010,1,6,12,4,NaN 134,2010,1,6,13,4,NaN 135,2010,1,6,14,4,NaN 136,2010,1,6,15,4,NaN 137,2010,1,6,16,4,NaN 138,2010,1,6,17,4,NaN 139,2010,1,6,18,4,NaN 140,2010,1,6,19,4,NaN 141,2010,1,6,20,4,NaN 142,2010,1,6,21,4,NaN 143,2010,1,6,22,4,NaN 144,2010,1,6,23,4,NaN 145,2010,1,7,0,4,NaN 146,2010,1,7,1,4,NaN 147,2010,1,7,2,4,NaN 148,2010,1,7,3,4,NaN 149,2010,1,7,4,4,NaN 150,2010,1,7,5,4,NaN 151,2010,1,7,6,4,NaN 152,2010,1,7,7,4,NaN 153,2010,1,7,8,4,NaN 154,2010,1,7,9,4,NaN 155,2010,1,7,10,4,NaN 156,2010,1,7,11,4,NaN 157,2010,1,7,12,4,NaN 158,2010,1,7,13,4,NaN 159,2010,1,7,14,4,NaN 160,2010,1,7,15,4,NaN 161,2010,1,7,16,4,NaN 162,2010,1,7,17,4,NaN 163,2010,1,7,18,4,NaN 164,2010,1,7,19,4,NaN 165,2010,1,7,20,4,NaN 166,2010,1,7,21,4,NaN 167,2010,1,7,22,4,NaN 168,2010,1,7,23,4,NaN 169,2010,1,8,0,4,NaN 170,2010,1,8,1,4,NaN 171,2010,1,8,2,4,NaN 172,2010,1,8,3,4,NaN 173,2010,1,8,4,4,NaN 174,2010,1,8,5,4,NaN 175,2010,1,8,6,4,NaN 176,2010,1,8,7,4,NaN 177,2010,1,8,8,4,NaN 178,2010,1,8,9,4,NaN 179,2010,1,8,10,4,NaN 180,2010,1,8,11,4,NaN 181,2010,1,8,12,4,NaN 182,2010,1,8,13,4,NaN 183,2010,1,8,14,4,NaN 184,2010,1,8,15,4,NaN 185,2010,1,8,16,4,NaN 186,2010,1,8,17,4,NaN 187,2010,1,8,18,4,NaN 188,2010,1,8,19,4,NaN 189,2010,1,8,20,4,NaN 190,2010,1,8,21,4,NaN 191,2010,1,8,22,4,NaN 192,2010,1,8,23,4,NaN 193,2010,1,9,0,4,NaN 194,2010,1,9,1,4,NaN 195,2010,1,9,2,4,NaN 196,2010,1,9,3,4,NaN 197,2010,1,9,4,4,NaN 198,2010,1,9,5,4,NaN 199,2010,1,9,6,4,NaN ``` (BeijingPM20100101_20151231.csv) ``` No,year,month,day,hour,season,PM_Dongsi,PM_Dongsihuan,PM_Nongzhanguan,PM_US_Post,DEWP,HUMI,PRES,TEMP,cbwd,Iws,precipitation,Iprec 1,2010,1,1,0,4,NA,NA,NA,NA,-21,43,1021,-11,NW,1.79,0,0 2,2010,1,1,1,4,NA,NA,NA,NA,-21,47,1020,-12,NW,4.92,0,0 3,2010,1,1,2,4,NA,NA,NA,NA,-21,43,1019,-11,NW,6.71,0,0 4,2010,1,1,3,4,NA,NA,NA,NA,-21,55,1019,-14,NW,9.84,0,0 5,2010,1,1,4,4,NA,NA,NA,NA,-20,51,1018,-12,NW,12.97,0,0 6,2010,1,1,5,4,NA,NA,NA,NA,-19,47,1017,-10,NW,16.1,0,0 7,2010,1,1,6,4,NA,NA,NA,NA,-19,44,1017,-9,NW,19.23,0,0 8,2010,1,1,7,4,NA,NA,NA,NA,-19,44,1017,-9,NW,21.02,0,0 9,2010,1,1,8,4,NA,NA,NA,NA,-19,44,1017,-9,NW,24.15,0,0 10,2010,1,1,9,4,NA,NA,NA,NA,-20,37,1017,-8,NW,27.28,0,0 11,2010,1,1,10,4,NA,NA,NA,NA,-19,37,1017,-7,NW,31.3,0,0 12,2010,1,1,11,4,NA,NA,NA,NA,-18,35,1017,-5,NW,34.43,0,0 13,2010,1,1,12,4,NA,NA,NA,NA,-19,32,1015,-5,NW,37.56,0,0 14,2010,1,1,13,4,NA,NA,NA,NA,-18,30,1015,-3,NW,40.69,0,0 15,2010,1,1,14,4,NA,NA,NA,NA,-18,28,1014,-2,NW,43.82,0,0 16,2010,1,1,15,4,NA,NA,NA,NA,-18,26,1014,-1,cv,0.89,0,0 17,2010,1,1,16,4,NA,NA,NA,NA,-19,25,1015,-2,NW,1.79,0,0 18,2010,1,1,17,4,NA,NA,NA,NA,-18,30,1015,-3,NW,2.68,0,0 19,2010,1,1,18,4,NA,NA,NA,NA,-18,35,1016,-5,NE,1.79,0,0 20,2010,1,1,19,4,NA,NA,NA,NA,-17,35,1017,-4,NW,1.79,0,0 21,2010,1,1,20,4,NA,NA,NA,NA,-17,38,1017,-5,cv,0.89,0,0 22,2010,1,1,21,4,NA,NA,NA,NA,-17,38,1018,-5,NW,1.79,0,0 23,2010,1,1,22,4,NA,NA,NA,NA,-17,38,1018,-5,NW,2.68,0,0 24,2010,1,1,23,4,NA,NA,NA,129,-17,41,1020,-5,cv,0.89,0,0 25,2010,1,2,0,4,NA,NA,NA,148,-16,38,1020,-4,SE,1.79,0,0 26,2010,1,2,1,4,NA,NA,NA,159,-15,42,1020,-4,SE,2.68,0,0 27,2010,1,2,2,4,NA,NA,NA,181,-11,63.5,1021,-5,SE,3.57,0,0 28,2010,1,2,3,4,NA,NA,NA,138,-7,85,1022,-5,SE,5.36,0,0 29,2010,1,2,4,4,NA,NA,NA,109,-7,85,1022,-5,SE,6.25,0,0 30,2010,1,2,5,4,NA,NA,NA,105,-7,92,1022,-6,SE,7.14,0,0 31,2010,1,2,6,4,NA,NA,NA,124,-7,92,1023,-6,SE,8.93,0,0 32,2010,1,2,7,4,NA,NA,NA,120,-7,85,1024,-5,SE,10.72,0,0 33,2010,1,2,8,4,NA,NA,NA,132,-8,85,1024,-6,SE,12.51,0,0 34,2010,1,2,9,4,NA,NA,NA,140,-7,85,1025,-5,SE,14.3,0,0 35,2010,1,2,10,4,NA,NA,NA,152,-7,85,1026,-5,SE,17.43,0,0 36,2010,1,2,11,4,NA,NA,NA,148,-8,79,1026,-5,SE,20.56,0,0 37,2010,1,2,12,4,NA,NA,NA,164,-8,79,1026,-5,SE,23.69,0,0 38,2010,1,2,13,4,NA,NA,NA,158,-8,79,1025,-5,SE,27.71,0,0 39,2010,1,2,14,4,NA,NA,NA,154,-9,73,1025,-5,SE,31.73,0,0 40,2010,1,2,15,4,NA,NA,NA,159,-9,73,1025,-5,SE,35.75,0,0 41,2010,1,2,16,4,NA,NA,NA,164,-9,73,1026,-5,SE,37.54,0,0 42,2010,1,2,17,4,NA,NA,NA,170,-8,79,1027,-5,SE,39.33,0,0 43,2010,1,2,18,4,NA,NA,NA,149,-8,79,1027,-5,SE,42.46,0,0 44,2010,1,2,19,4,NA,NA,NA,154,-8,79,1028,-5,SE,44.25,0,0 45,2010,1,2,20,4,NA,NA,NA,164,-7,85,1028,-5,SE,46.04,0,0 46,2010,1,2,21,4,NA,NA,NA,156,-7,85,1027,-5,SE,49.17,0,0 47,2010,1,2,22,4,NA,NA,NA,126,-8,85,1028,-6,SE,52.3,0,0 48,2010,1,2,23,4,NA,NA,NA,90,-8,85,1027,-6,SE,55.43,0,0 49,2010,1,3,0,4,NA,NA,NA,63,-7,92,1027,-6,SE,58.56,0.4,0.4 50,2010,1,3,1,4,NA,NA,NA,65,-8,85,1026,-6,SE,61.69,0.5,0.9 51,2010,1,3,2,4,NA,NA,NA,55,-8,92,1026,-7,SE,65.71,0.5,1.4 52,2010,1,3,3,4,NA,NA,NA,65,-8,92,1025,-7,SE,68.84,0.7,2.1 53,2010,1,3,4,4,NA,NA,NA,83,-8,92,1024,-7,SE,72.86,1.2,3.3 54,2010,1,3,5,4,NA,NA,NA,91,-9,92,1024,-8,SE,76.88,0.7,4 55,2010,1,3,6,4,NA,NA,NA,86,-10,85,1024,-8,SE,80.9,1,5 56,2010,1,3,7,4,NA,NA,NA,82,-10,92,1024,-9,SE,84.92,0.7,5.7 57,2010,1,3,8,4,NA,NA,NA,86,-10,92,1024,-9,SE,89.84,0.5,6.2 58,2010,1,3,9,4,NA,NA,NA,78,-11,85,1023,-9,SE,93.86,0.7,6.9 59,2010,1,3,10,4,NA,NA,NA,98,-11,85,1023,-9,SE,97.88,0.4,7.3 60,2010,1,3,11,4,NA,NA,NA,107,-11,85,1022,-9,SE,102.8,0.5,7.8 61,2010,1,3,12,4,NA,NA,NA,90,-11,85,1021,-9,SE,105.93,1.1,8.9 62,2010,1,3,13,4,NA,NA,NA,96,-11,85,1020,-9,SE,111.74,0.5,9.4 63,2010,1,3,14,4,NA,NA,NA,95,-11,85,1020,-9,SE,116.66,0.3,9.7 64,2010,1,3,15,4,NA,NA,NA,86,-11,85,1020,-9,SE,121.58,0.5,10.2 65,2010,1,3,16,4,NA,NA,NA,70,-11,85,1020,-9,SE,124.71,0.2,10.4 66,2010,1,3,17,4,NA,NA,NA,61,-11,85,1020,-9,SE,127.84,0.1,10.5 67,2010,1,3,18,4,NA,NA,NA,53,-11,85,1021,-9,cv,0.89,0.4,10.9 68,2010,1,3,19,4,NA,NA,NA,71,-11,85,1022,-9,cv,1.78,0.3,11.2 69,2010,1,3,20,4,NA,NA,NA,72,-10,92,1022,-9,NW,4.02,0,0 70,2010,1,3,21,4,NA,NA,NA,76,-11,92,1023,-10,NW,7.15,0,0 71,2010,1,3,22,4,NA,NA,NA,73,-11,85,1023,-9,NW,11.17,0,0 72,2010,1,3,23,4,NA,NA,NA,79,-12,92,1023,-11,NW,14.3,0,0 73,2010,1,4,0,4,NA,NA,NA,58,-14,85,1023,-12,NW,16.09,0,0 74,2010,1,4,1,4,NA,NA,NA,25,-16,56,1023,-9,NW,21.9,0,0 75,2010,1,4,2,4,NA,NA,NA,26,-17,56,1024,-10,NW,29.95,0,0 76,2010,1,4,3,4,NA,NA,NA,28,-18,56,1024,-11,NW,39.78,0,0 77,2010,1,4,4,4,NA,NA,NA,26,-19,51,1025,-11,NW,48.72,0,0 78,2010,1,4,5,4,NA,NA,NA,20,-20,51,1026,-12,NW,55.87,0,0 79,2010,1,4,6,4,NA,NA,NA,29,-21,47,1027,-12,NW,64.81,0,0 80,2010,1,4,7,4,NA,NA,NA,26,-21,51,1027,-13,NW,73.75,0,0 81,2010,1,4,8,4,NA,NA,NA,27,-22,46,1028,-13,NW,80.9,0,0 82,2010,1,4,9,4,NA,NA,NA,27,-22,46,1029,-13,NW,90.73,0,0 83,2010,1,4,10,4,NA,NA,NA,25,-22,43,1030,-12,NW,100.56,0,0 84,2010,1,4,11,4,NA,NA,NA,29,-23,39,1031,-12,NW,108.61,0,0 85,2010,1,4,12,4,NA,NA,NA,32,-21,43,1030,-11,NW,117.55,0,0 86,2010,1,4,13,4,NA,NA,NA,28,-20,43,1030,-10,NW,127.38,0,0 87,2010,1,4,14,4,NA,NA,NA,29,-21,40,1030,-10,NW,136.32,0,0 88,2010,1,4,15,4,NA,NA,NA,30,-21,37,1030,-9,NW,145.26,0,0 89,2010,1,4,16,4,NA,NA,NA,30,-21,37,1031,-9,NW,152.41,0,0 90,2010,1,4,17,4,NA,NA,NA,28,-20,47,1032,-11,NW,159.56,0,0 91,2010,1,4,18,4,NA,NA,NA,26,-23,36,1032,-11,NW,165.37,0,0 92,2010,1,4,19,4,NA,NA,NA,31,-21,47,1033,-12,NW,171.18,0,0 93,2010,1,4,20,4,NA,NA,NA,33,-24,36,1034,-12,NW,180.12,0,0 94,2010,1,4,21,4,NA,NA,NA,29,-24,39,1034,-13,NW,187.27,0,0 95,2010,1,4,22,4,NA,NA,NA,31,-24,39,1035,-13,NW,195.32,0,0 96,2010,1,4,23,4,NA,NA,NA,30,-26,38,1035,-15,NW,198.45,0,0 97,2010,1,5,0,4,NA,NA,NA,34,-26,45,1035,-17,NW,201.58,0,0 98,2010,1,5,1,4,NA,NA,NA,27,-26,49,1035,-18,NW,205.6,0,0 99,2010,1,5,2,4,NA,NA,NA,25,-26,53,1035,-19,NW,208.73,0,0 100,2010,1,5,3,4,NA,NA,NA,28,-27,45,1035,-18,NW,213.65,0,0 101,2010,1,5,4,4,NA,NA,NA,28,-27,49,1035,-19,NW,218.57,0,0 102,2010,1,5,5,4,NA,NA,NA,27,-27,38,1034,-16,NE,4.92,0,0 103,2010,1,5,6,4,NA,NA,NA,27,-26,41,1035,-16,NE,8.05,0,0 104,2010,1,5,7,4,NA,NA,NA,27,-27,38,1034,-16,NE,13.86,0,0 105,2010,1,5,8,4,NA,NA,NA,29,-26,41,1035,-16,NE,18.78,0,0 106,2010,1,5,9,4,NA,NA,NA,36,-26,38,1035,-15,NE,24.59,0,0 107,2010,1,5,10,4,NA,NA,NA,30,-25,38,1035,-14,NE,29.51,0,0 108,2010,1,5,11,4,NA,NA,NA,27,-25,35,1035,-13,NE,34.43,0,0 109,2010,1,5,12,4,NA,NA,NA,39,-25,33,1034,-12,NE,39.35,0,0 110,2010,1,5,13,4,NA,NA,NA,41,-24,33,1032,-11,NE,41.14,0,0 111,2010,1,5,14,4,NA,NA,NA,33,-22,39,1032,-11,cv,0.89,0,0 112,2010,1,5,15,4,NA,NA,NA,50,-23,36,1031,-11,NW,1.79,0,0 113,2010,1,5,16,4,NA,NA,NA,56,-24,33,1031,-11,NW,3.58,0,0 114,2010,1,5,17,4,NA,NA,NA,59,-23,36,1031,-11,NW,5.37,0,0 115,2010,1,5,18,4,NA,NA,NA,60,-23,36,1032,-11,NW,7.16,0,0 116,2010,1,5,19,4,NA,NA,NA,84,-22,46,1033,-13,NW,10.29,0,0 117,2010,1,5,20,4,NA,NA,NA,106,-22,43,1033,-12,NW,13.42,0,0 118,2010,1,5,21,4,NA,NA,NA,66,-24,59,1033,-18,NW,16.55,0,0 119,2010,1,5,22,4,NA,NA,NA,50,-22,46,1034,-13,NW,20.57,0,0 120,2010,1,5,23,4,NA,NA,NA,56,-22,59,1033,-16,NW,23.7,0,0 121,2010,1,6,0,4,NA,NA,NA,77,-25,49,1033,-17,NW,26.83,0,0 122,2010,1,6,1,4,NA,NA,NA,50,-25,38,1033,-14,NE,4.02,0,0 123,2010,1,6,2,4,NA,NA,NA,44,-26,35,1034,-14,NE,8.04,0,0 124,2010,1,6,3,4,NA,NA,NA,27,-26,35,1033,-14,NE,13.85,0,0 125,2010,1,6,4,4,NA,NA,NA,28,-26,35,1033,-14,NE,17.87,0,0 126,2010,1,6,5,4,NA,NA,NA,21,-26,35,1033,-14,NE,23.68,0,0 127,2010,1,6,6,4,NA,NA,NA,25,-26,35,1033,-14,NE,28.6,0,0 128,2010,1,6,7,4,NA,NA,NA,20,-26,38,1034,-15,NE,33.52,0,0 129,2010,1,6,8,4,NA,NA,NA,29,-26,35,1034,-14,NE,39.33,0,0 130,2010,1,6,9,4,NA,NA,NA,34,-25,35,1035,-13,NE,44.25,0,0 131,2010,1,6,10,4,NA,NA,NA,42,-25,33,1035,-12,NE,50.06,0,0 132,2010,1,6,11,4,NA,NA,NA,28,-24,33,1035,-11,NE,54.98,0,0 133,2010,1,6,12,4,NA,NA,NA,36,-24,30,1034,-10,NE,59,0,0 134,2010,1,6,13,4,NA,NA,NA,48,-22,36,1033,-10,NW,4.02,0,0 135,2010,1,6,14,4,NA,NA,NA,49,-22,34,1033,-9,NW,5.81,0,0 136,2010,1,6,15,4,NA,NA,NA,52,-22,31,1033,-8,cv,0.89,0,0 137,2010,1,6,16,4,NA,NA,NA,56,-22,31,1033,-8,NW,1.79,0,0 138,2010,1,6,17,4,NA,NA,NA,96,-21,37,1033,-9,NW,3.58,0,0 139,2010,1,6,18,4,NA,NA,NA,75,-22,34,1033,-9,NW,5.37,0,0 140,2010,1,6,19,4,NA,NA,NA,105,-22,50,1034,-14,cv,0.89,0,0 141,2010,1,6,20,4,NA,NA,NA,132,-22,43,1035,-12,NW,1.79,0,0 142,2010,1,6,21,4,NA,NA,NA,93,-21,55,1034,-14,NW,3.58,0,0 143,2010,1,6,22,4,NA,NA,NA,131,-22,59,1035,-16,NW,5.37,0,0 144,2010,1,6,23,4,NA,NA,NA,127,-21,65,1035,-16,NW,7.16,0,0 145,2010,1,7,0,4,NA,NA,NA,130,-21,65,1035,-16,NW,8.95,0,0 146,2010,1,7,1,4,NA,NA,NA,43,-21,65,1035,-16,cv,0.45,0,0 147,2010,1,7,2,4,NA,NA,NA,37,-22,70,1036,-18,cv,1.34,0,0 148,2010,1,7,3,4,NA,NA,NA,30,-23,50,1036,-15,NW,4.02,0,0 149,2010,1,7,4,4,NA,NA,NA,28,-24,50,1035,-16,NW,7.15,0,0 150,2010,1,7,5,4,NA,NA,NA,24,-25,42,1035,-15,NW,10.28,0,0 151,2010,1,7,6,4,NA,NA,NA,23,-24,46,1035,-15,NW,14.3,0,0 152,2010,1,7,7,4,NA,NA,NA,24,-25,35,1036,-13,NE,4.92,0,0 153,2010,1,7,8,4,NA,NA,NA,27,-24,42,1036,-14,NW,4.02,0,0 154,2010,1,7,9,4,NA,NA,NA,40,-23,39,1036,-12,NW,8.94,0,0 155,2010,1,7,10,4,NA,NA,NA,42,-22,39,1036,-11,NW,12.96,0,0 156,2010,1,7,11,4,NA,NA,NA,42,-20,43,1036,-10,NW,16.09,0,0 157,2010,1,7,12,4,NA,NA,NA,55,-21,37,1035,-9,NW,19.22,0,0 158,2010,1,7,13,4,NA,NA,NA,52,-21,34,1034,-8,NW,21.01,0,0 159,2010,1,7,14,4,NA,NA,NA,51,-20,34,1033,-7,NW,22.8,0,0 160,2010,1,7,15,4,NA,NA,NA,57,-20,34,1032,-7,NW,24.59,0,0 161,2010,1,7,16,4,NA,NA,NA,50,-18,44,1032,-8,NW,26.38,0,0 162,2010,1,7,17,4,NA,NA,NA,54,-19,44,1032,-9,NW,28.17,0,0 163,2010,1,7,18,4,NA,NA,NA,67,-19,51,1032,-11,NE,0.89,0,0 164,2010,1,7,19,4,NA,NA,NA,106,-18,56,1033,-11,NW,1.79,0,0 165,2010,1,7,20,4,NA,NA,NA,159,-19,71,1032,-15,NE,1.79,0,0 166,2010,1,7,21,4,NA,NA,NA,198,-19,65,1032,-14,cv,0.45,0,0 167,2010,1,7,22,4,NA,NA,NA,190,-21,55,1032,-14,cv,1.34,0,0 168,2010,1,7,23,4,NA,NA,NA,210,-21,65,1032,-16,cv,2.23,0,0 169,2010,1,8,0,4,NA,NA,NA,195,-21,71,1031,-17,NW,1.79,0,0 170,2010,1,8,1,4,NA,NA,NA,275,-19,77,1031,-16,NW,3.58,0,0 171,2010,1,8,2,4,NA,NA,NA,164,-20,71,1031,-16,NE,0.89,0,0 172,2010,1,8,3,4,NA,NA,NA,110,-19,71,1030,-15,SE,0.89,0,0 173,2010,1,8,4,4,NA,NA,NA,100,-18,77,1030,-15,cv,0.45,0,0 174,2010,1,8,5,4,NA,NA,NA,81,-18,77,1029,-15,cv,1.34,0,0 175,2010,1,8,6,4,NA,NA,NA,71,-18,77,1029,-15,NW,1.79,0,0 176,2010,1,8,7,4,NA,NA,NA,66,-16,78,1029,-13,NE,0.89,0,0 177,2010,1,8,8,4,NA,NA,NA,92,-16,72,1029,-12,SE,0.89,0,0 178,2010,1,8,9,4,NA,NA,NA,135,-16,72,1030,-12,SE,1.78,0,0 179,2010,1,8,10,4,NA,NA,NA,155,-17,56,1030,-10,NE,0.89,0,0 180,2010,1,8,11,4,NA,NA,NA,198,-16,61,1029,-10,cv,0.89,0,0 181,2010,1,8,12,4,NA,NA,NA,250,-16,56,1028,-9,SE,3.13,0,0 182,2010,1,8,13,4,NA,NA,NA,200,-15,57,1026,-8,SE,4.92,0,0 183,2010,1,8,14,4,NA,NA,NA,231,-16,52,1026,-8,cv,0.89,0,0 184,2010,1,8,15,4,NA,NA,NA,250,-16,52,1025,-8,cv,1.78,0,0 185,2010,1,8,16,4,NA,NA,NA,212,-16,52,1025,-8,cv,2.67,0,0 186,2010,1,8,17,4,NA,NA,NA,219,-17,48,1025,-8,SE,0.89,0,0 187,2010,1,8,18,4,NA,NA,NA,227,-17,52,1026,-9,cv,0.45,0,0 188,2010,1,8,19,4,NA,NA,NA,226,-17,61,1026,-11,cv,1.34,0,0 189,2010,1,8,20,4,NA,NA,NA,225,-17,66,1026,-12,cv,1.79,0,0 190,2010,1,8,21,4,NA,NA,NA,168,-18,71,1027,-14,NW,1.79,0,0 191,2010,1,8,22,4,NA,NA,NA,169,-16,56,1027,-9,NW,4.92,0,0 192,2010,1,8,23,4,NA,NA,NA,165,-16,66,1027,-11,NW,6.71,0,0 193,2010,1,9,0,4,NA,NA,NA,159,-17,71,1027,-13,cv,0.89,0,0 194,2010,1,9,1,4,NA,NA,NA,167,-17,71,1027,-13,NW,3.13,0,0 195,2010,1,9,2,4,NA,NA,NA,196,-17,77,1027,-14,NW,4.92,0,0 196,2010,1,9,3,4,NA,NA,NA,169,-17,84,1027,-15,NW,8.05,0,0 197,2010,1,9,4,4,NA,NA,NA,155,-17,71,1027,-13,NW,9.84,0,0 198,2010,1,9,5,4,NA,NA,NA,119,-19,77,1027,-16,NW,13.86,0,0 199,2010,1,9,6,4,NA,NA,NA,106,-18,77,1027,-15,NW,17.88,0,0 ``` **代码:** ```scala package com.spark.transformation import org.apache.log4j.{Level, Logger} import org.apache.spark.sql.SparkSession import org.apache.spark.sql.types._ import org.junit.Test class NullProcessor { Logger.getLogger("org").setLevel(Level.ERROR) val spark: SparkSession = SparkSession.builder() .master("local[6]") .appName(this.getClass.getSimpleName) .getOrCreate() @Test def nullAndNaN(): Unit = { // 2. 导入数据集 // 3. 读取数据集 // 1. 通过Saprk-csv自动的推断类型来读取, 推断数字的时候会将 NaN 推断为 字符串 // spark.read // .option("header", true) // .option("inferSchema", true) // .csv(...) // 2. 直接读取字符串, 在后续的操作中使用 map 算子转类型 // spark.read.csv().map( row => row... ) // 3. 指定 Schema, 不要自动推断 val schema = StructType( List( StructField("id", LongType), StructField("year", IntegerType), StructField("month", IntegerType), StructField("day", IntegerType), StructField("hour", IntegerType), StructField("season", IntegerType), StructField("pm", DoubleType) ) ) val sourceDF = spark.read .option("header", value = true) .schema(schema) .csv("E:\\Project\\Spark\\spark-sql\\input\\beijingpm_with_nan.csv") sourceDF.show() // 4. 丢弃 // 2019, 12, 12, NaN // 规则: // 1. any, 只有有一个 NaN 就丢弃 sourceDF.na.drop("any").show() sourceDF.na.drop().show() // 2. all, 所有数据都是 NaN 的行才丢弃 sourceDF.na.drop("all").show() // 3. 某些列的规则 sourceDF.na.drop("any", List("year", "month", "day", "hour")).show() // 5. 填充 // 规则: // 1. 针对所有列数据进行默认值填充 sourceDF.na.fill(0).show() // 2. 针对特定列填充 sourceDF.na.fill(0, List("year", "month")).show() } @Test def strProcessor(): Unit = { // 读取数据集 val sourceDF = spark.read .option("header", value = true) .option("inferSchema", value = true) .csv("E:\\Project\\Spark\\spark-sql\\input\\BeijingPM20100101_20151231.csv") // sourceDF.show() // 1. 丢弃 import spark.implicits._ // sourceDF.where('PM_Dongsi =!= "NA").show() // 2. 替换 import org.apache.spark.sql.functions._ // select name, age, case // when ... then ... // when ... then ... // else sourceDF.select( 'No as "id", 'year, 'month, 'day, 'hour, 'season, when('PM_Dongsi === "NA", Double.NaN) .otherwise('PM_Dongsi cast DoubleType) .as("pm") ).show() // 原类型和转换过后的类型, 必须一致 sourceDF.na.replace("PM_Dongsi", Map("NA" -> "NaN", "NULL" -> "null")).show() } } ``` *附:原文链接地址* https://blog.csdn.net/weixin_45417821/article/details/108472687

标签:

Spark

,

Spark SQL

非特殊说明,本博所有文章均为博主原创。

如若转载,请注明出处:

https://lilinchao.com/archives/1345.html

上一篇

05.【转载】Dataset (DataFrame) 的基础操作(一)

下一篇

07.RDD、DataFrame和DataSet对比与转换

评论已关闭

栏目分类

随笔

2

Java

326

大数据

229

工具

31

其它

25

GO

47

NLP

4

标签云

Spark Core

ClickHouse

Http

LeetCode刷题

容器深入研究

Git

Java

Zookeeper

Filter

MySQL

Kafka

Scala

Golang

锁

排序

Docker

DataWarehouse

前端

Spark Streaming

链表

Jquery

数据结构

工具

算法

Java工具类

JavaWEB项目搭建

Nacos

数据结构和算法

线程池

国产数据库改造

友情链接

申请

范明明

庄严博客

Mx

陶小桃Blog

虫洞

评论已关闭